AI Protocols Are the New GTM Strategy

The real AI distribution play is happening under the surface, inside the connection layer.

Hi, I’m Kyle Kelly. Each week, Line of Sight breaks down how AI, strategy, and revenue growth architecture turn complexity into leverage.

AI protocols are still evolving, but the early signs are clear: standardization is becoming strategy. Whether these layers converge or fragment, they’re already changing how software products distribute and compete.

AI is entering a new phase.

The race is no longer about building better models but about connecting them.

The systems that win will not be the smartest. They will be the ones that share intelligence most effectively.

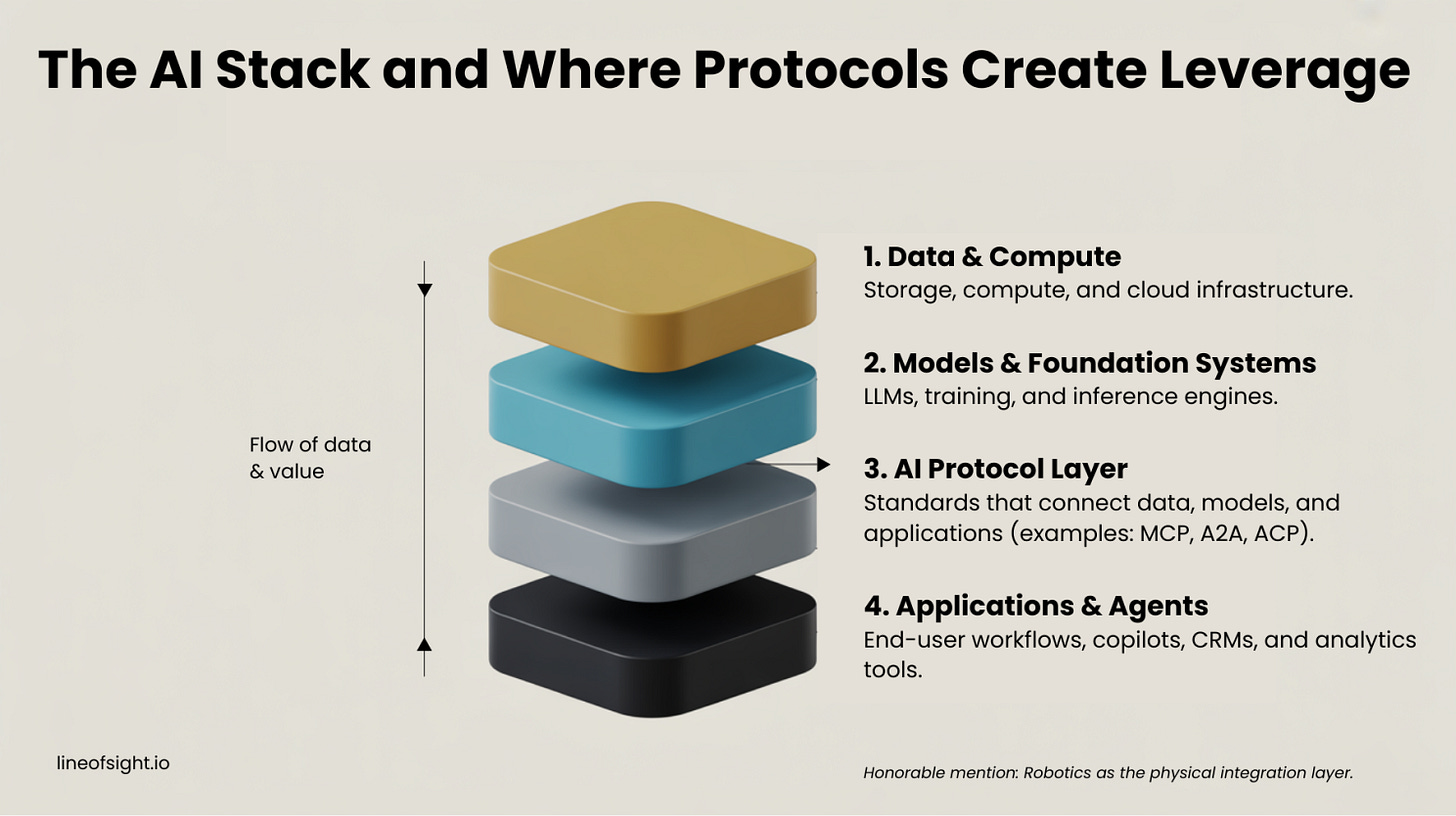

AI protocols are the emerging standards that let models, data, and applications communicate. They are doing for AI what HTTP did for the web and what Bluetooth did for devices.

These technical standards are quickly becoming commercial control points. The way your systems connect will determine how your product distributes, how partners integrate, and how revenue compounds.

The next go-to-market advantage will not come from your sales deck. It will come from your architecture.

Infrastructure as Distribution

When integrations become native, your product starts selling itself. Customer acquisition becomes a design problem, not a sales problem. That’s what makes protocols a GTM decision.

In the last decade, software companies built distribution through marketing, sales, and ecosystems. In the AI era, distribution begins at the infrastructure layer.

The standard that wins will influence three dimensions of commercial performance:

✅ Data distribution – how easily customer data can be activated by models to create personalized experiences.

✅ Intelligence distribution – how seamlessly insights from one system flow into another.

✅ Value distribution – how effectively companies and partners collaborate through shared AI systems.

Choosing an AI protocol is no longer only a technical decision. It is also a commercial one.

While protocols are technical by design, their adoption patterns mirror the dynamics of go-to-market strategy. They determine how products reach users, partners, and revenue streams.

Real-world signal: MCP adoption is moving from theory to platform-level integration.

The Commercial Story

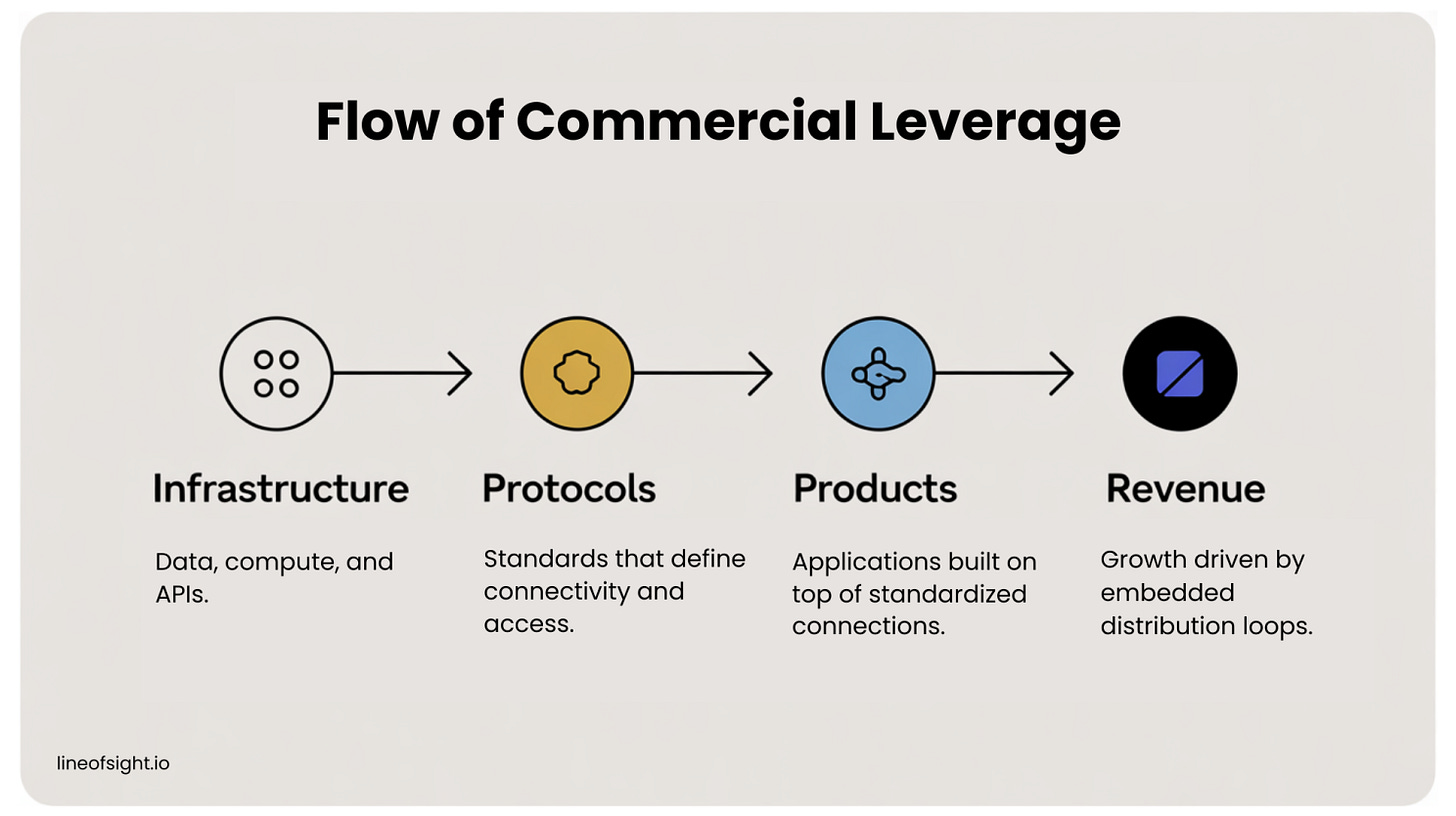

The rise of AI protocols marks a shift in how companies generate and distribute revenue. Distribution is moving from people and platforms to data and connectivity.

Each protocol creates a new form of commercial leverage. When systems integrate natively, products reach customers faster, acquisition costs fall, and onboarding becomes part of the product experience. Customer acquisition is no longer just a sales function. It is a design decision at the infrastructure level.

The companies that own these connection layers will control access to data, set the rules for interoperability, and define where value accumulates. In this new model, go-to-market strategy starts in the architecture of your stack. How your systems connect will determine how your business grows.

For GTM leaders, this shift has five major implications:

Revenue moves to the connection layer. Owning a protocol means capturing value every time a partner, customer, or agent interacts through it.

Customer acquisition costs compress. Integration replaces sales friction. Distribution becomes a feature of the product.

Partnership strategy becomes architecture. Your choice of protocol determines which ecosystems and partners you can access.

GTM execution becomes programmable. Agents and workflows operate across systems automatically, reducing manual sales motion.

CROs become system designers. The next generation of revenue leaders will build intelligent, interconnected go-to-market machines.

The Scale of the Market

According to MarketsandMarkets, the AI infrastructure sector is projected to grow from $136 billion in 2024 to nearly $400 billion by 2030.

These numbers highlight the scale of the opportunity. The companies building protocols are not only selling compute power or APIs. They are establishing control points for how data and workflows move across the AI ecosystem.
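To put the projection in annual terms, the short sketch below computes the compound annual growth rate it implies. It uses the figures quoted above and rounds "nearly $400 billion" up to 400, which is an assumption; the function name `cagr` is illustrative only.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the projection cited above, in USD billions.
# Assumption: "nearly $400 billion" is rounded to 400.
implied_rate = cagr(136, 400, 2030 - 2024)
print(f"Implied CAGR: {implied_rate:.1%}")
```

Under those rounded inputs, the market would need to grow just under 20% a year for six consecutive years.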

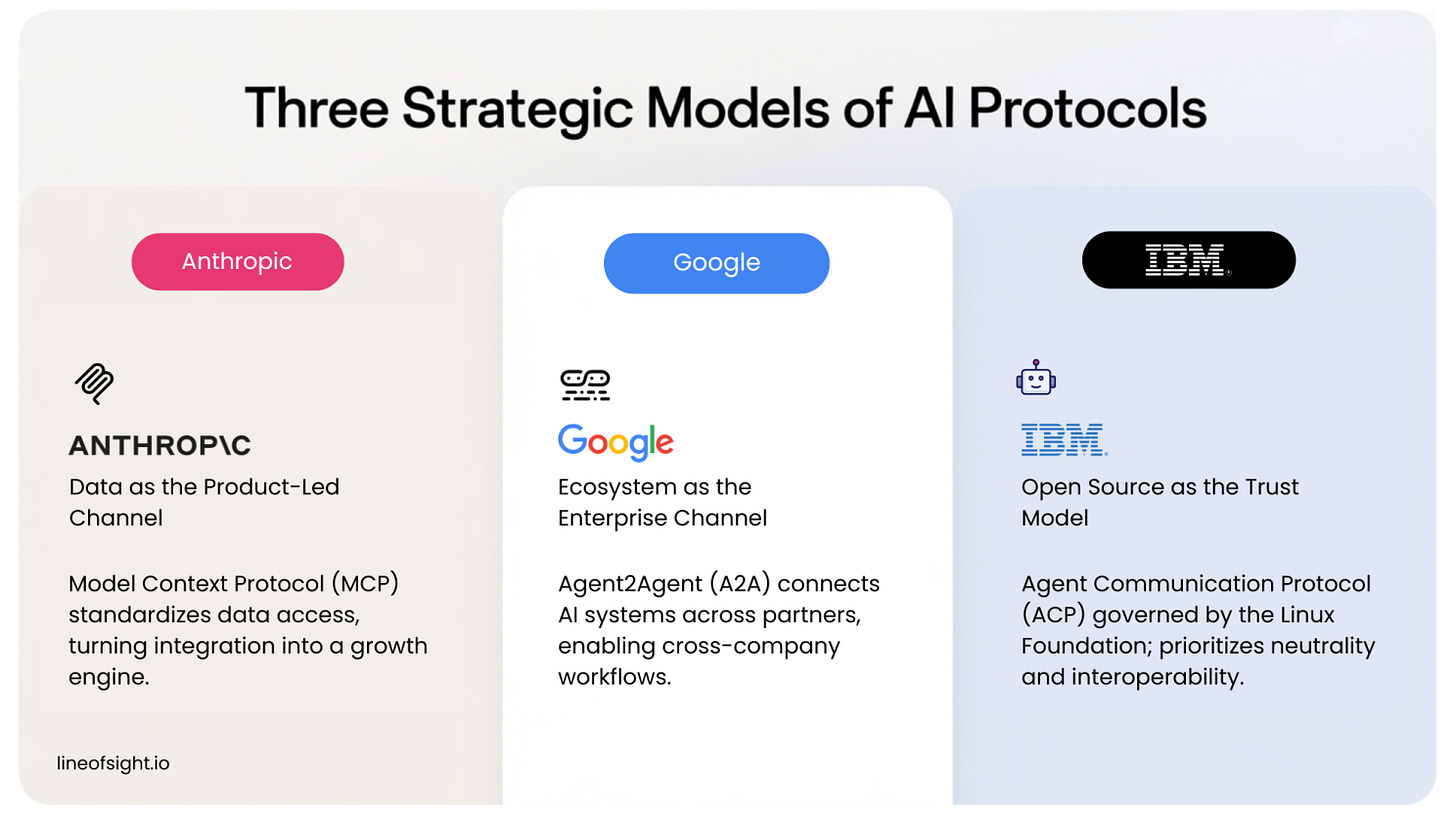

This is why Anthropic, Google, and IBM are taking different strategic approaches to protocol design. Each reflects a distinct go-to-market model and a bet on how value will accumulate.

Three Strategic Models

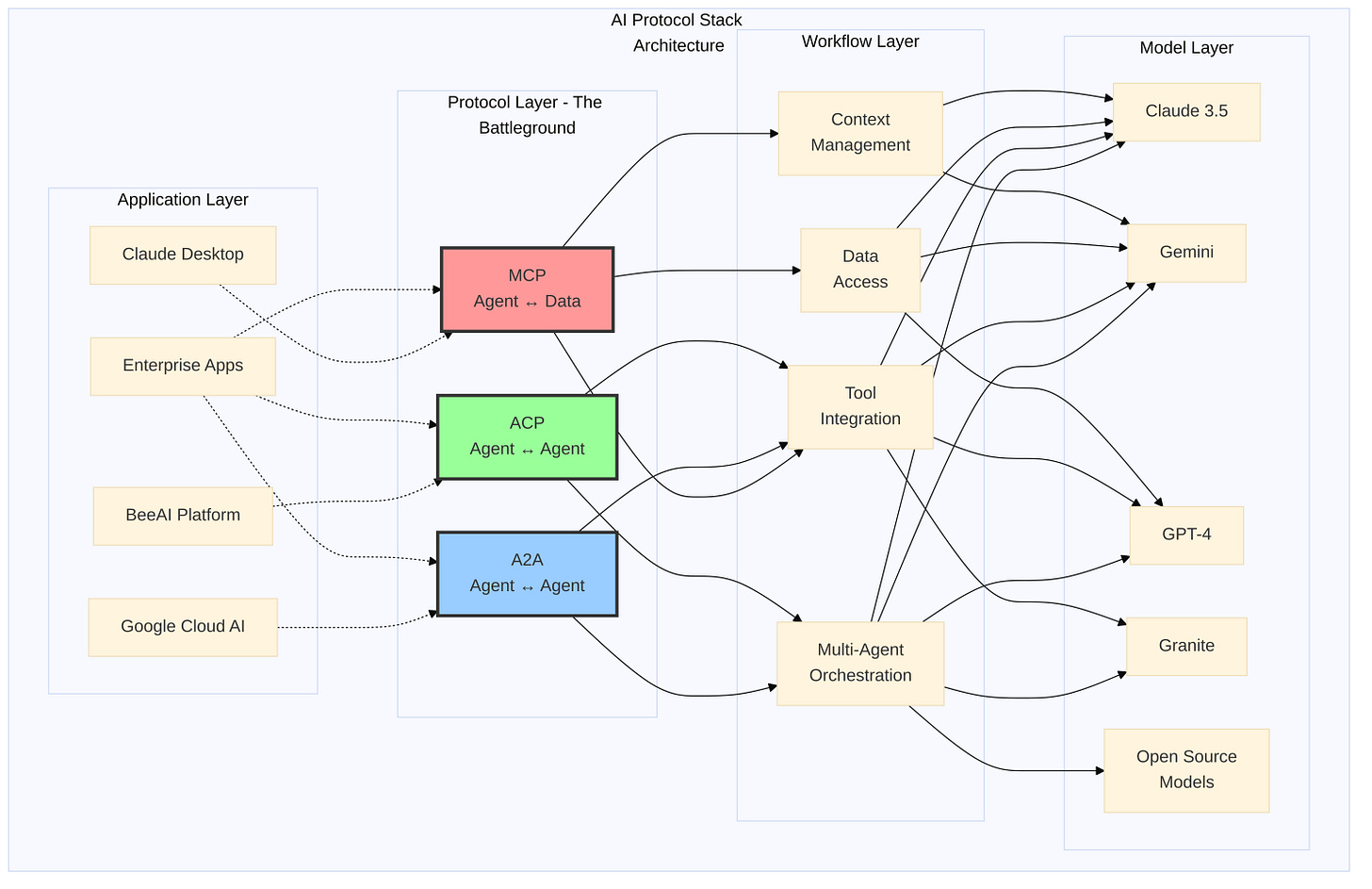

Three competing standards are emerging for how AI systems connect: one focused on data access (MCP), one on ecosystem collaboration (A2A), and one on open interoperability (ACP).

1. Anthropic: Data as the Product-Led Channel

The Model Context Protocol (MCP) is an open standard for how models connect to external data sources and tools, including enterprise systems. By simplifying integration, Anthropic turns data connectivity into a growth engine. Every new connection increases product usage and deepens customer engagement.
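For a sense of what this looks like on the wire: MCP messages follow JSON-RPC 2.0, and a client asks a server to run a tool with the `tools/call` method. The sketch below only builds such a request. The tool name `crm_lookup_account` and its arguments are hypothetical, and a real client would also need the protocol's initialization handshake and a transport, which are left out here.

```python
import json
from itertools import count

# Simple incrementing request ids; JSON-RPC only requires them to be unique per session.
_request_ids = count(1)


def mcp_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical tool and arguments, for illustration only.
message = mcp_tool_call("crm_lookup_account", {"domain": "example.com"})
print(message)
```

The commercial point is that the request shape stays the same regardless of which system sits behind the tool, so each additional data source is an incremental connection rather than a new custom integration.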

2. Google: Ecosystem as the Enterprise Channel

The Agent2Agent (A2A) Protocol connects AI systems across partner environments. Google launched A2A with more than 50 technology and services partners, creating a network effect similar to early cloud ecosystems.

For go-to-market teams, this model enables agents from different organizations to collaborate on shared workflows, expanding cross-company value creation.

3. IBM: Open Source as the Trust Model

The Agent Communication Protocol (ACP) is an open-source standard governed by the Linux Foundation. By prioritizing neutrality, IBM appeals to enterprises that value flexibility and interoperability. The open model trades short-term control for long-term trust and adoption.

The Commercial Operator’s Playbook

Revenue and operations leaders should treat AI protocols as part of their commercial architecture. Four actions can help guide this shift.

1. Re-evaluate core systems as data inputs. View CRM, marketing, and analytics platforms as data sources for AI systems rather than standalone tools. Identify where the most valuable commercial data resides and how easily it can be accessed.

2. Align protocol bets with your business model. Product-led businesses should focus on data activation protocols such as MCP. Channel-driven or partnership-led models align more naturally with ecosystem frameworks such as A2A. Organizations that prioritize long-term flexibility should consider open standards like ACP.

3. Quantify the return on infrastructure investment. Frame protocol adoption as a driver of revenue growth, not a technology expense. McKinsey estimates that AI-enabled go-to-market systems can generate up to 5x revenue growth and an 89% profit increase when deployed effectively.

4. Run targeted pilots. Start with one high-impact workflow. Examples include lead scoring that connects product data to CRM systems, partner deal orchestration across shared pipelines, or real-time personalization in marketing automation.
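The alignment rule in step 2 can be written down as a simple lookup, which is a useful starting point for a pilot shortlist. This is a minimal sketch of the mapping described above, not a selection framework; the names `GtmModel`, `PROTOCOL_BETS`, and `starting_protocol` are invented for illustration.

```python
from enum import Enum


class GtmModel(Enum):
    PRODUCT_LED = "product-led"
    PARTNER_LED = "channel- or partnership-led"
    FLEXIBILITY_FIRST = "long-term flexibility"


# Mapping taken directly from step 2 of the playbook above.
PROTOCOL_BETS = {
    GtmModel.PRODUCT_LED: "MCP",
    GtmModel.PARTNER_LED: "A2A",
    GtmModel.FLEXIBILITY_FIRST: "ACP",
}


def starting_protocol(model: GtmModel) -> str:
    """Return the protocol the playbook suggests evaluating first."""
    return PROTOCOL_BETS[model]


for model in GtmModel:
    print(f"{model.value}: start with {starting_protocol(model)}")
```

Most companies run more than one motion, so in practice the output is a place to begin a pilot rather than a final answer.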

From Sales Leader to Systems Architect

The boundary between commercial strategy and technical architecture is disappearing. The design of a company’s AI stack increasingly determines its ability to generate, distribute, and retain revenue.

Future revenue leaders will think like system architects. They will understand how information flows across teams, how AI agents collaborate, and how protocols enable both. The next wave of market leadership will belong to organizations that master this connection layer and treat infrastructure as distribution.

Every major platform shift reshapes how markets form and how revenue flows. AI protocols represent the next of these shifts. They turn infrastructure into distribution and make connectivity itself the new competitive advantage.

In the years ahead, the companies that master these connection layers will not only build faster but sell smarter. Their growth will compound through systems that integrate, learn, and transact without friction.

The go-to-market strategies that win this decade will be designed, not deployed, because the architecture will matter as much as the message.

References

MarketsandMarkets (2024). AI Infrastructure Market Size, Share, Industry Report, Revenue Trends and Growth Drivers.

Anthropic (2024). Introducing the Model Context Protocol (MCP).

Google Developers (2025). Announcing the Agent2Agent Protocol (A2A).

IBM Research (2025). An Open-Source Protocol for AI Agents to Interact.
