Why Distribution Is the Real Moat in the AI Era

Hi, I’m Kyle Kelly. Welcome to Line of Sight, a weekly newsletter decoding how AI, capital, and strategy create leverage.

This week, I’ve been thinking about distribution, not as a marketing function but as the ultimate power layer of AI. Every company talks about models, but the real game is reach, control, and compounding access. I’ve watched this same pattern repeat across industries.

That’s exactly what’s playing out with OpenAI’s rumored Agent Builder launch. They’re not just building intelligence; they’re consolidating distribution. Below, I break down how the moat is shifting and why the next decade of AI belongs to whoever controls the delivery system.

The Great AI Commoditization

AI is moving faster than any other technology cycle. Models that once took years to build are copied in weeks. Open source accelerates the trend, making frontier-level capabilities available to anyone with a cloud account.

The moat is not the model. It is not the algorithm. It is who controls the pipes.

Energy is a bottleneck, but we will save that for another day.

In the AI era, distribution is the moat.

The AI Ecosystem Stack: Where Value Really Lives

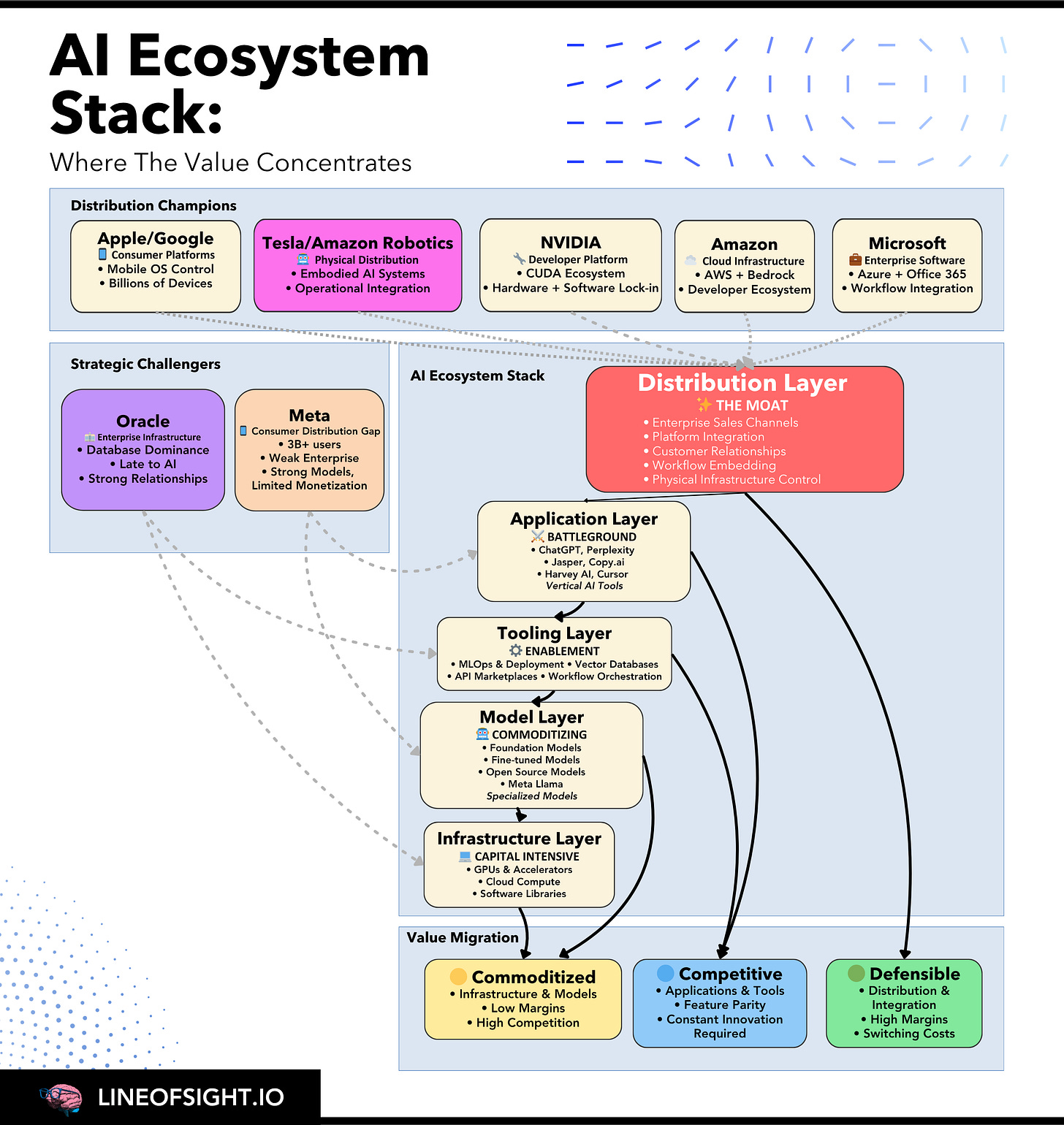

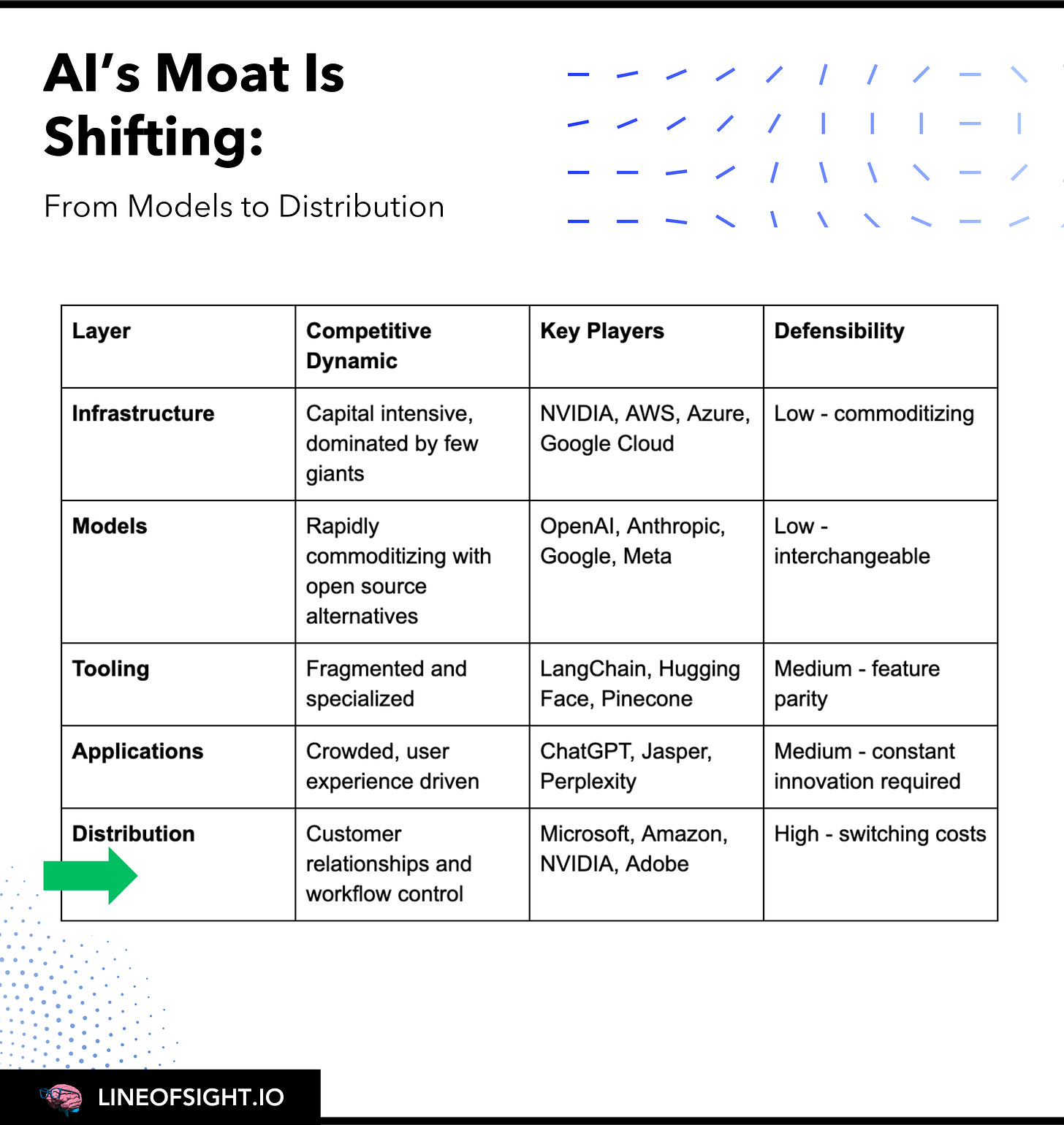

To understand why distribution creates the ultimate defensible advantage, we must dissect the AI ecosystem into its constituent layers. Each layer represents distinct competitive dynamics, and as we move up the stack, we see clear value migration away from commoditized infrastructure toward defensible distribution channels.

The AI value chain divides into five critical layers, with value migrating toward distribution where customer relationships and workflow integration are owned:

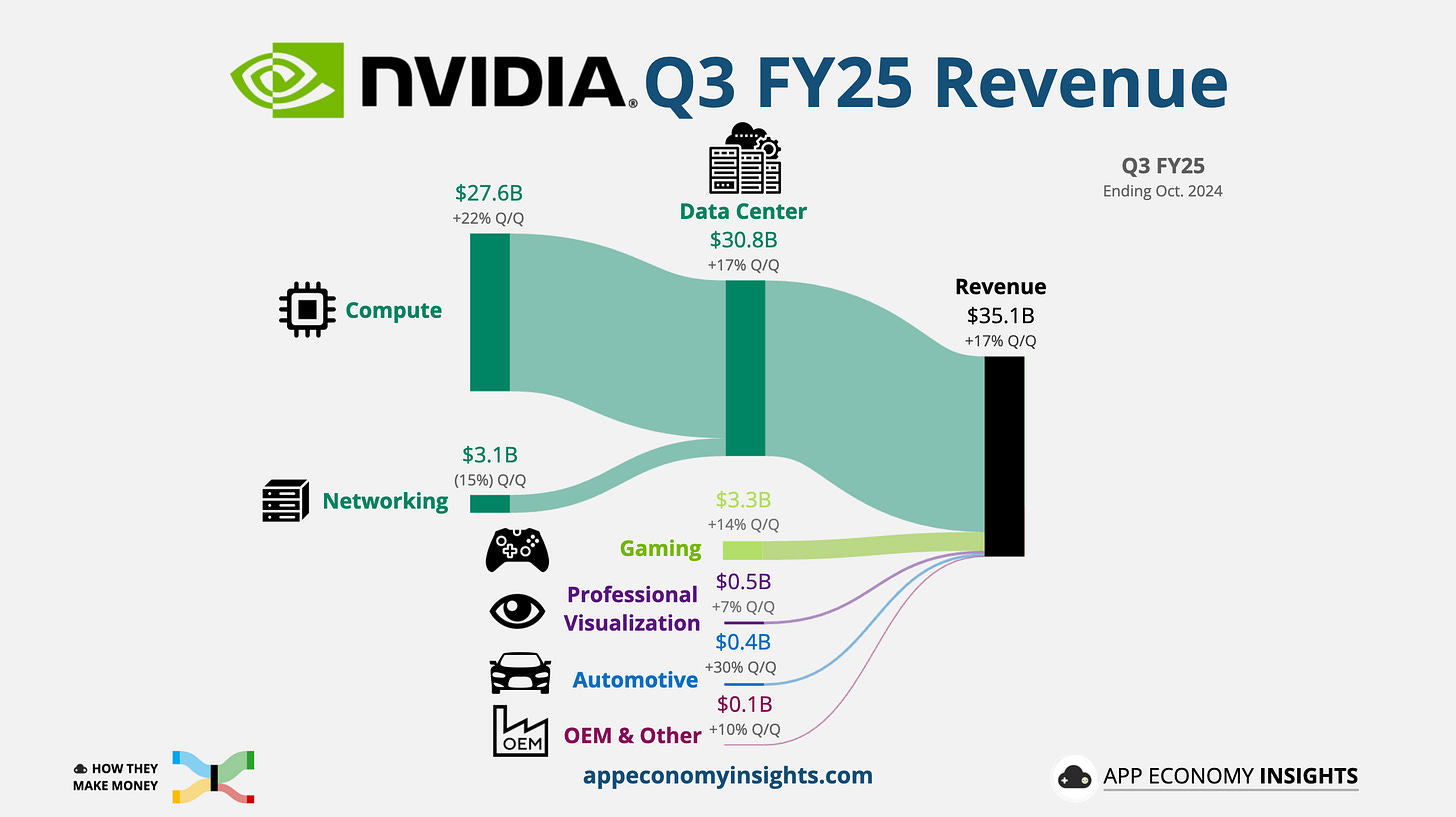

As the diagram illustrates, value migrates upward. The infrastructure and model layers are becoming increasingly commoditized. While NVIDIA currently enjoys a dominant position in the hardware market, its true moat lies not in its chips, but in the CUDA ecosystem that locks in developers. Similarly, while foundation models from OpenAI, Anthropic, and Google are incredibly powerful, they are also becoming increasingly interchangeable. The real battle for value is being fought in the application and distribution layers, where companies are competing to own the customer relationship and embed themselves in the workflows of individuals and enterprises.

Layer breakdown:

Infrastructure: Capital intensive, dominated by a few giants (NVIDIA, AWS, Azure, Google Cloud).

Models: Rapidly commoditizing (OpenAI, Anthropic, Google, Meta, open source).

Tooling: Fragmented and specialized (LangChain, Hugging Face, Pinecone).

Applications: Crowded and user experience driven (ChatGPT, Jasper, Perplexity).

Distribution: The defensible layer (Microsoft, Amazon, NVIDIA, Adobe).

The Distribution Champions

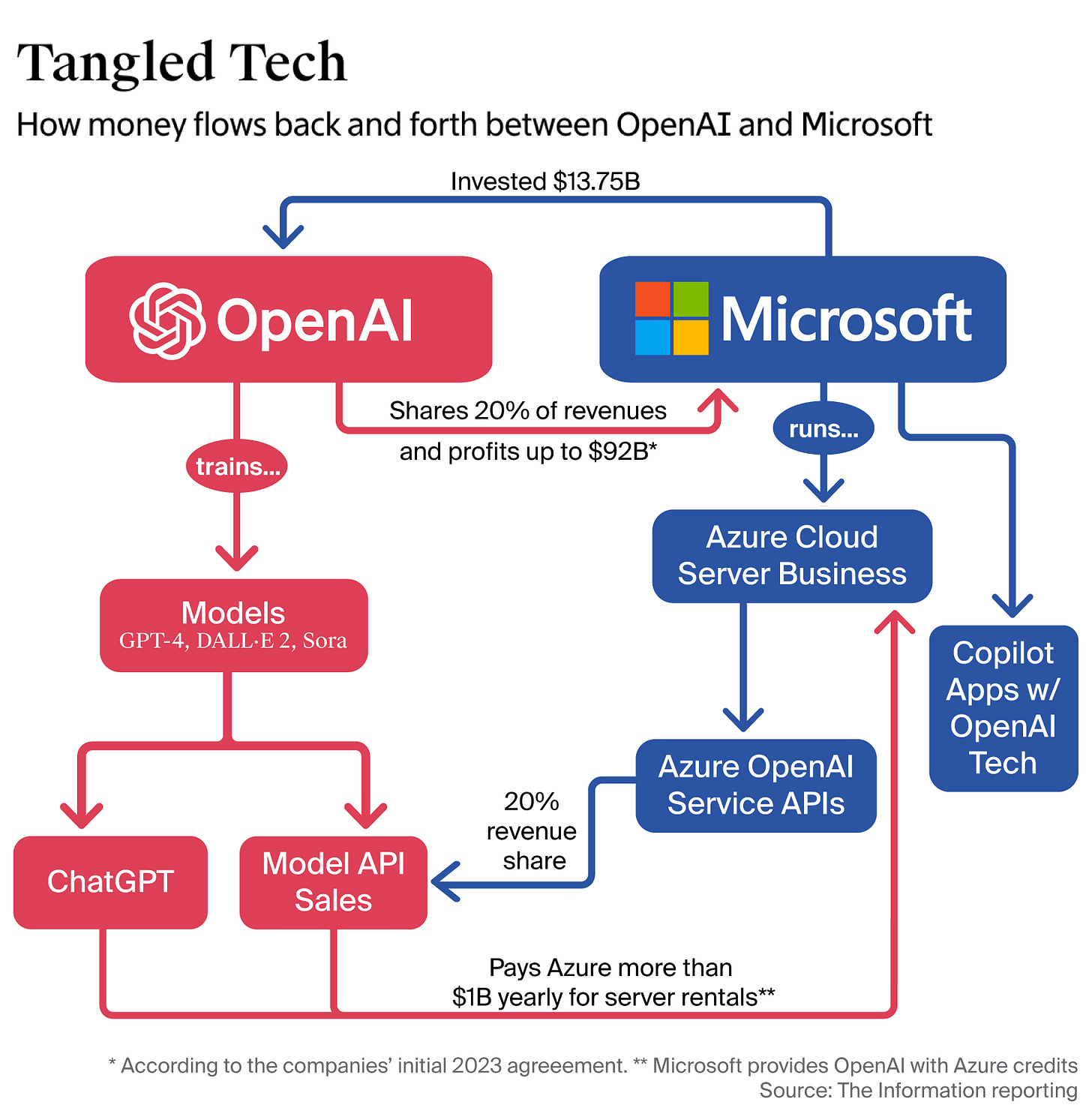

OpenAI + Microsoft

OpenAI plugged into Microsoft’s enterprise muscle. By embedding GPT models into Azure and Microsoft 365 (formerly Office 365), OpenAI gained immediate reach into Microsoft’s installed base of enterprise customers. Distribution channels that would take decades to build were unlocked overnight.

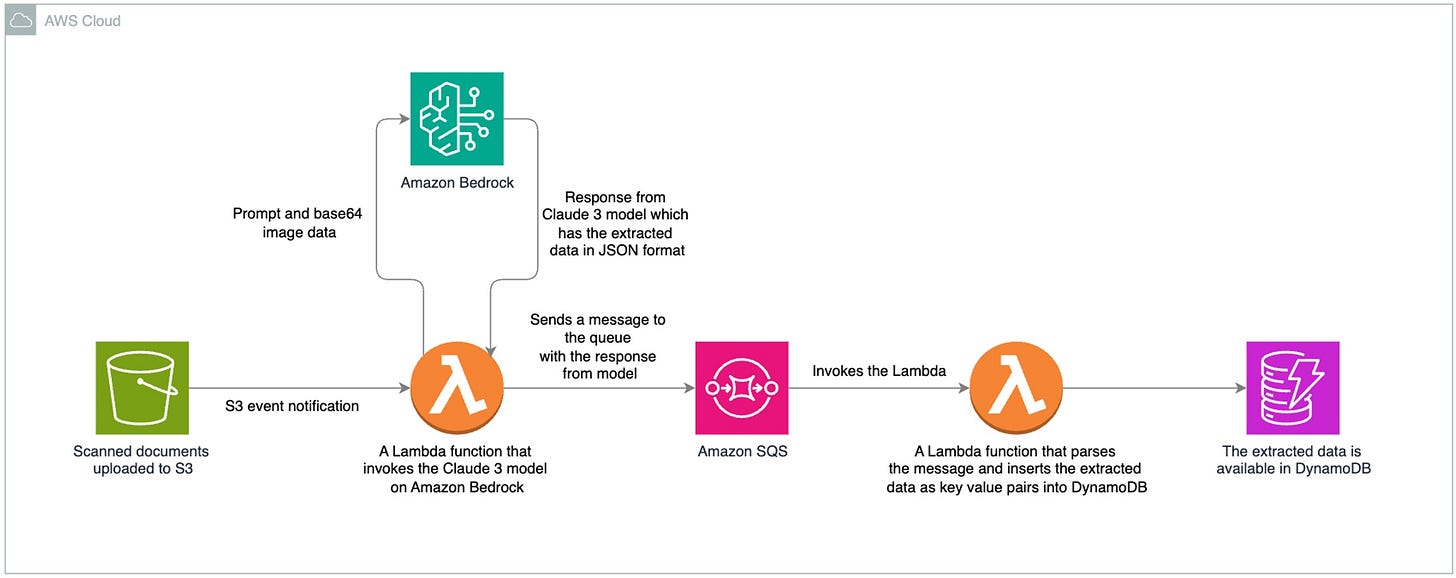

Anthropic + Amazon

Anthropic runs through AWS and Amazon Bedrock, backed by $8B of Amazon’s capital. That gives it both compute power and access to AWS’s developer and enterprise ecosystem.

NVIDIA

NVIDIA’s moat is CUDA, its parallel computing platform and programming model for GPUs. Years of evangelism, education, and ecosystem building created a network effect so sticky that competitors with comparable hardware still cannot break in. As one analyst notes, “The reasons for NVIDIA’s dominance are years and billions of dollars in investment in the CUDA ecosystem, evangelism, and education of the community that builds AI.”

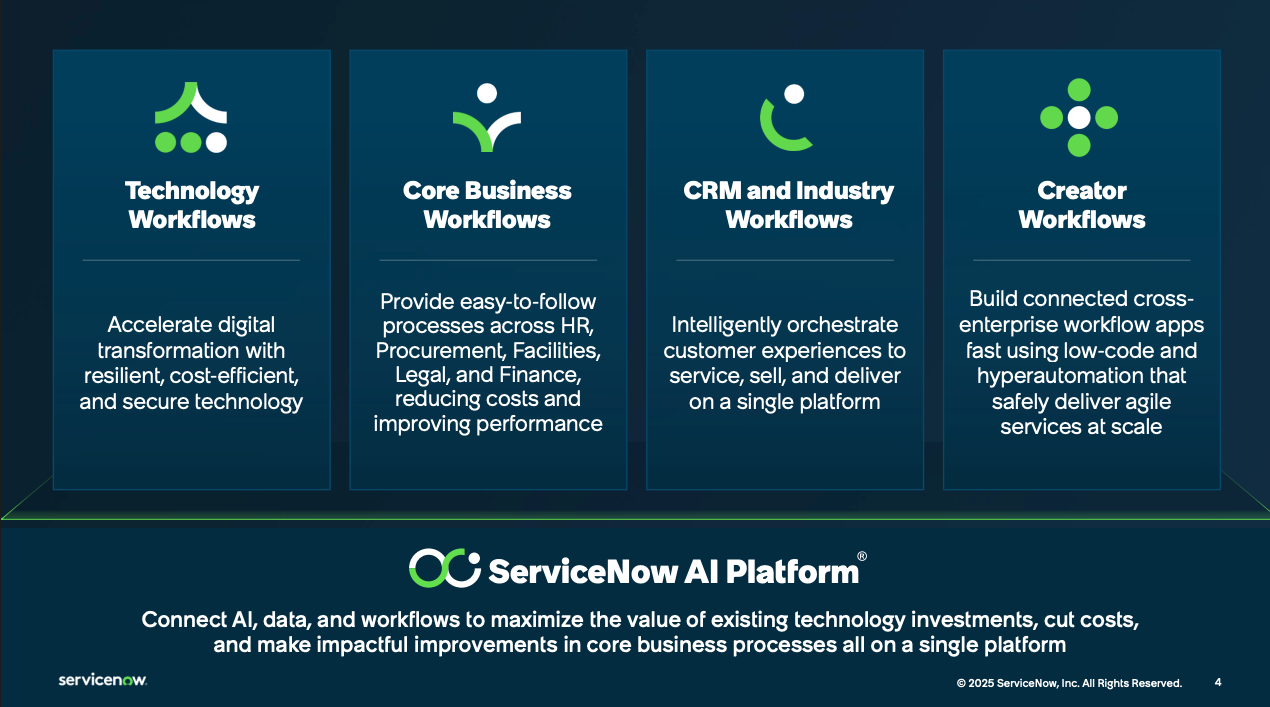

ServiceNow

ServiceNow is showing how distribution moats extend beyond hyperscalers. With deep workflow integration across IT, HR, and enterprise service management, its platform becomes the control point for automation. By embedding AI into workflows that enterprises already depend on, ServiceNow locks in adoption at the process level, not just the product level.

Distribution Reframed for the AI Cycle

Distribution has always been a moat. What is different now is the speed. Models commoditize in months instead of years. The companies that build first, iterate fastest, and distribute best will hold the durable advantage.

Product is the entry ticket. Distribution is the multiplier.

How to Evaluate AI Companies

When you look at an AI business, ask:

Who owns the customer relationship? Is it direct or through a partner?

How embedded is it in workflows? Is it a standalone tool or core to daily work?

What are the switching costs? Could customers swap within a week?

Is there a network effect? Does usage compound the moat?

These questions cut through hype about “better models” and show where defensibility is real.

The Next Frontier of Distribution

Distribution moats will not stay digital. The next step is physical.

The next wave will form around three layers: AI agents that need channels to reach users, data pipelines that become essential infrastructure, and robotic systems that embed AI into physical operations.

Software distribution created switching costs through workflows. Platform distribution created lock-in through ecosystems. Physical distribution through robotics creates the ultimate moat: operational integration that spans both digital and physical worlds.

When Amazon’s robots become integral to warehouse operations, or Tesla’s manufacturing systems embed AI into production lines, the switching costs become orders of magnitude higher than those of any software solution. The customer relationship becomes nearly unbreakable.

The winners in AI will not be those who build the smartest models. They will be those who control the pipes that others must build upon, whether digital workflows, data infrastructure, or physical operations.

The real question is not who builds the best AI. It is whose distribution channels are becoming indispensable to how work actually gets done.

This essay is part of Line of Sight, where I translate AI noise into operator strategy. Subscribe for frameworks and playbooks on growth, deals, and distribution moats.